The Ghosts in the Machine: Inside AI’s Hidden Human Trauma 🫥

Behind every clean, seamless AI tool lies an invisible workforce paid pennies to wade through humanity's darkest content. This is the human trauma powering the machine - and the reckoning we can no longer delay.

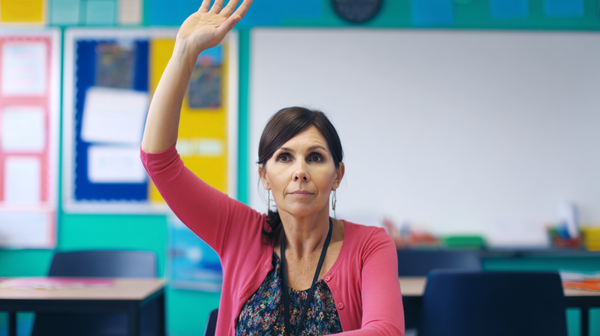

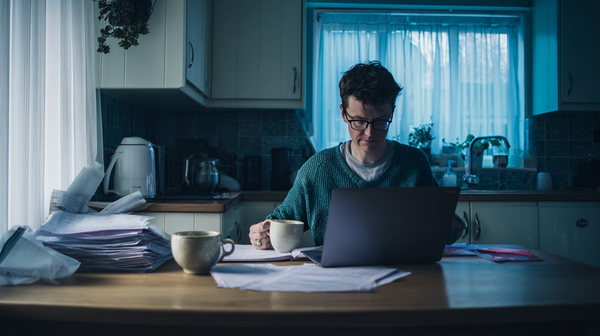

A young man sits hunched over a desk in Nairobi, his face lit by the cold glow of a computer screen. On it flicker paragraphs describing the sexual assault of a child, blow by excruciating blow.

He earns $1.32 an hour so that you can ask ChatGPT how to write a wedding speech - or how to code a website - without the machine spitting back racial slurs or pornographic threats.

This is the hidden human cost of AI content moderation: for every slick chatbot, every algorithm that claims to "understand" language, there are tens of thousands of people paid pennies to sift through humanity's darkest thoughts, labelling them, moderating them, and feeding them into the ever-hungry maw of large language models.

We talk endlessly about AI hallucinations - when systems fabricate facts, spew misinformation, or produce gibberish. But we almost never talk about the hallucinations suffered by the people who train it.

Nightmares of beheadings. Flashbacks of sexual torture. Emotional numbness from staring into the abyss so the rest of us never have to. This is the hidden trauma behind the rise of generative AI, a cost measured in mental health crises and silent suffering.

And as ChatGPT's latest model is being rolled out to the platform's 800 million users, it feels like a moment we can't afford to let slip by. We need to reckon with the human cost that got us here - and the shadow it casts over what the next wave of AI might demand.

Because the truth is urgent and simple: machines don't build themselves. Humans build them. Humans train them. And when those machines misfire, replace jobs, or erode trust in reality itself, it's ultimately humans who suffer the consequences.

The Scale of the Invisible Workforce 😶‍🌫️

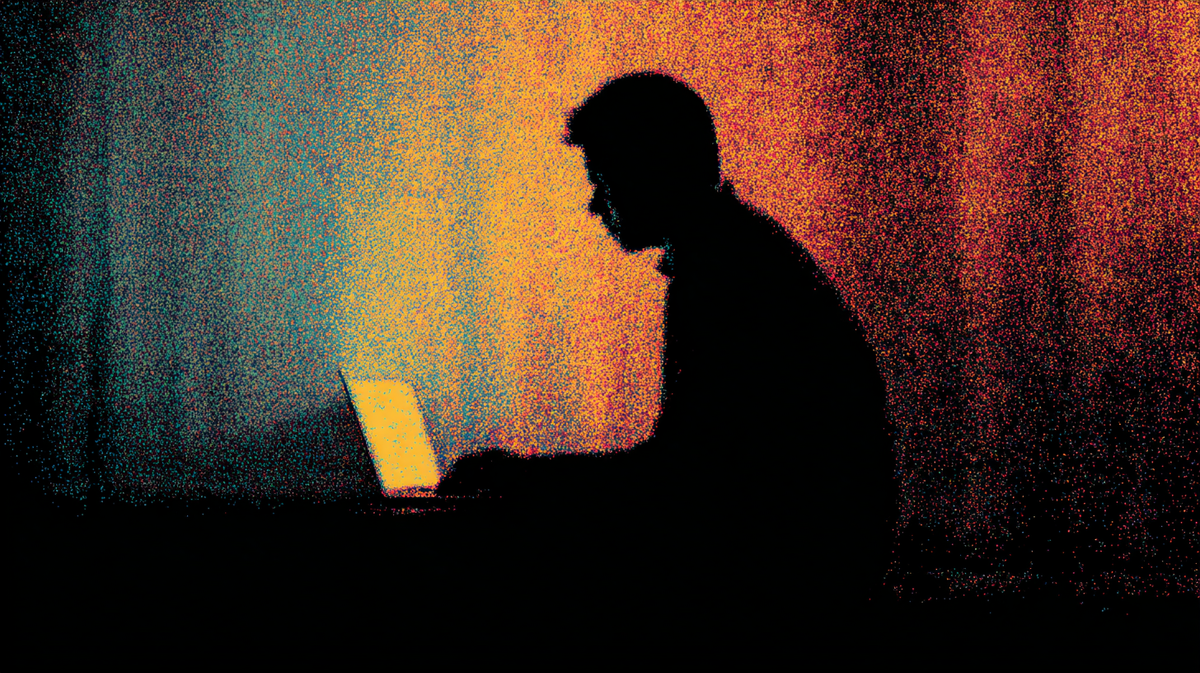

It's easy to imagine that artificial intelligence simply trains itself, crunching data in endless silicon silence.

But behind every AI system, from chatbots to content filters, stands a hidden workforce numbering in the tens, perhaps hundreds, of thousands. These are the people who perform AI data labeling, annotation, and content moderation: the tedious, grueling tasks of reading, tagging, and classifying the internet's raw sewage so that algorithms can learn to distinguish wedding vows from hate speech, or harmless queries from violent conspiracies.

Many of these workers live in the Global South. They work from cramped apartments in Nairobi, Manila, and Bogotá: places where a dollar stretches further, and where tech giants can quietly hire armies of people for a fraction of Western wages.

Their existence is obscured by layers of subcontracting. A Silicon Valley firm contracts a data services company; that company hires local agencies; the local agency hires "independent contractors." In the end, the names on the billion-dollar AI patents are American or European. The trauma, however, is outsourced.

And so is the silence.

Many workers are bound by strict Non-Disclosure Agreements (NDAs), legally muzzled from speaking publicly about what they see or how it affects them. The human cost of AI becomes invisible - erased behind corporate statements about "innovation" and "safety."

A few stories have slipped through. In Kenya, employees of Sama were paid as little as $1.32 an hour to sift through some of humanity's worst horrors to help train OpenAI's systems. In the Philippines, moderators working for firms contracted by Appen and other AI data labeling giants report constant exposure to graphic violence and sexual content, with little mental health support beyond the occasional wellness webinar.

These people are the ghost workers of AI. Without them, your chatbot doesn't know how to avoid hate speech. Your social feed doesn't stay "brand safe." And your digital world remains, at least superficially, clean.

What These Workers Actually See 👀

So what, exactly, does the hidden workforce behind AI see each day? It's easy to say they moderate "inappropriate content." The reality is far more harrowing.

Day after day, these workers scan and label some of humanity's most disturbing creations. Categories include:

- Graphic sexual violence, including child sexual abuse material.

- Videos and images of beheadings, torture, and executions.

- Neo-Nazi manifestos, racial slurs, and detailed instructions for hate crimes.

- Suicide notes, or step-by-step guides for self-harm and methods of killing oneself.

In one account from Time's investigation, a Kenyan content moderator described being required to read hundreds of pieces of sexual violence content in a single shift, some involving minors. Another worker quoted by The Verge spoke of "images of people getting their heads cut off...that I can't get out of my mind."

Some of the text and video snippets arrive with clinical labels - "CSAM" (Child Sexual Abuse Material), "extreme violence," "hate threat." But such euphemisms are a thin shield against the psychic toll of witnessing horrors firsthand.

There's an emotional dissonance to the job. Officially, it's "just data." But the human brain doesn't easily compartmentalize the grotesque things it reads or watches, no matter how many times supervisors tell workers to stay detached.

"It's like the violence lives inside me now," said one former content moderator in The Verge's exposé.

And the exposure doesn't end when the shift does. Nightmares come home with them. Flashbacks strike in the middle of grocery shopping. Some develop a numbness so complete that ordinary emotions - joy, sorrow, desire - feel muted, as though a film lies between them and the world.

This is the hidden trauma of AI content moderation. The invisible ink behind your machine-generated words.

The Hidden Process Behind AI Training 🤖

The chart below is a simplified view of how humans prepare data before machines are exposed to it.

Psychological Fallout: Lives Shattered 💔

Few jobs leave a mark as deep - or as hidden - as moderating the internet's worst content.

Psychologists call it secondary trauma: a condition where people develop PTSD-like symptoms simply from witnessing, hearing, or reading about others' suffering. For AI content moderators and data labelers, the risk is constant and relentless.

Some workers report nightmares in which the violent images they reviewed replay in gruesome loops. Others experience intrusive flashbacks while riding the bus or shopping for groceries. Over time, many describe a numbing of their emotions - a flattening of joy, sorrow, or empathy - because the only way to cope is to feel nothing at all.

Relationships often buckle under the strain. One Kenyan worker said he couldn't touch his partner after reading day after day about sexual violence. Others admit to substance abuse - alcohol, marijuana, pills - to dampen memories they can't escape.

It's not merely about gruesome images. Even endless streams of hateful slurs, threats, or suicide notes can chip away at mental health. Over time, workers begin to see the world as darker, crueler, more dangerous than it might truly be.

"We were told to separate our feelings from the content. But how do you separate your feelings from a video of a child being raped?" one Kenyan worker asked TIME.

Corporate policies often promise "wellness programs" and "mental health resources." In practice, these may amount to generic webinars, shallow counseling sessions, or superficial gestures. Meanwhile, the algorithm demands the same relentless pace: hundreds of pieces of content reviewed per shift, quotas to meet, errors to avoid.

Lawsuits have begun to crack open this secrecy. In Kenya, former Sama employees have sued the company, alleging not only low pay but psychological harm and inadequate mental health support while working on projects for OpenAI. In the U.S., moderators contracted by Cognizant for platforms like YouTube have sued for PTSD and other trauma-related conditions.

AI's hidden trauma isn't some abstract ethical puzzle. It's lived reality: a mental health crisis unfolding quietly behind the sleek interfaces of your apps and chatbots.

The Ethics of the Machine 🧑‍⚖️

There's a stark moral paradox at the heart of modern artificial intelligence.

We hail AI as a leap forward: a technological marvel poised to write essays, diagnose diseases, or craft the perfect playlist. But beneath this narrative of progress lies a quiet truth: the very systems designed to keep AI "safe" for public use often depend on traumatizing human beings.

The hidden workforce exists because large language models (LLMs) like ChatGPT cannot yet distinguish good from evil on their own. Left unchecked, AI models absorb the internet's worst toxins (hate speech, conspiracy theories, violent fantasies) and risk regurgitating them back at unsuspecting users.

So humans step in. They sift through the sludge, label it, and feed those examples back into training data so that machines learn to recognize and avoid harmful content. This is why your AI assistant politely declines to answer questions about bomb-making recipes or to spew racial slurs.

But it raises a chilling question: Is it ethical to traumatize some people so the rest of us can enjoy clean, seamless interactions with machines?

Beyond trauma, there's a broader human cost. Many of these workers earn poverty wages. They work without health benefits, job security, or adequate psychological support. Meanwhile, the companies whose models they help train soar toward trillion-dollar valuations.

There's also a creeping moral hazard in outsourcing. Tech giants can claim their products are safe while disclaiming responsibility for the human labor required to make them so. The suffering is hidden in distant offices, behind NDAs, labeled merely as "content moderation."

And this ethical reckoning isn't just about compassion. It's about trust. As AI becomes more deeply embedded in our lives - in law, healthcare, media - the public needs confidence not only in the technology but in the fairness and humanity of the systems behind it. "AI systems don't eliminate human labor. They hide it," says Mary L. Gray, author of Ghost Work, a book examining the workers who make the internet go round.

These are the uncomfortable questions that linger beneath the AI boom. And as new models like ChatGPT 5 emerge, they're only growing louder.

Big Tech's Response - or Lack Thereof 🤷

On paper, Big Tech insists it cares deeply about the well-being of the human workers who keep AI safe.

Spokespeople from OpenAI, for instance, have said the company requires contractors to provide "robust mental health services" and fair compensation. Meta and Google regularly tout wellness programs and trauma-informed support for content moderators. Appen, one of the world's biggest data-labeling firms, states publicly that worker welfare is a "top priority."

But talk to workers on the ground, and a different story emerges.

A Kenyan moderator working for Sama on OpenAI data said the only mental health resources offered were generic group therapy sessions, sometimes with dozens of people present, and little space for individuals to speak. Workers described company-appointed therapists as hesitant to discuss workplace trauma in detail, fearing breaches of confidentiality that could jeopardize their jobs.

In the Philippines, moderators working for subcontractors of Appen and other AI data companies told reporters that mental health support was either inadequate or nonexistent, despite daily exposure to violent or sexual content.

Even when corporations speak publicly about "worker wellness," outsourcing creates moral distance. AI companies often shield themselves behind multiple layers of contractors and sub-contractors. By the time a traumatized worker tries to seek help, responsibility has been diffused into a labyrinth of corporate relationships.

Despite these revelations, much of Big Tech's official response remains carefully worded and tightly controlled. Companies acknowledge the challenges but stop short of publicly committing to universal standards for pay, mental health care, or transparency in AI data work.

The result is a system where AI appears clean and seamless, while the human cost remains buried in outsourced silence.

The Silence Around It 🤫

For all the horror these workers endure, the world rarely hears their stories. The silence is deliberate and meticulously engineered.

Most content moderators and AI data labelers sign strict Non-Disclosure Agreements (NDAs) forbidding them to talk publicly about their work, the content they see, or the companies for which they toil.

Even beyond legal threats, there's another force at play: shame. Many workers struggle to explain their jobs to friends or family. How do you casually mention you spent your day reading about child sexual abuse or watching beheading videos? The stigma around mental health only deepens the isolation.

"I don't tell my family what I do," said one Kenyan moderator to TIME. "They would think I'm crazy."

And the outsourcing model itself functions as a silencer. When Big Tech pushes this work out to contractors in the Global South, it becomes geographically and culturally invisible to users in wealthier nations. The trauma is effectively exported. The profits remain at home.

There's also the digital privilege at the core of this story. Users in the West enjoy seamless interactions with AI systems. Chatbots politely refuse to answer offensive queries. Social feeds remain mostly "brand safe." Meanwhile, the cost of that cleanliness is borne by workers thousands of miles away, many of them earning less than a living wage and absorbing the worst of humanity so the rest of us never have to see it.

"People want AI to seem magical," said Mary L. Gray, author of Ghost Work. "But the magic trick is hiding all the human labor."

The result is an illusion of frictionless technology. We talk about hallucinating chatbots and machine ethics, but we rarely talk about the human beings behind the curtain. About the mental scars, low pay, and quiet desperation that keep AI running.

Until that silence is broken, the hidden human cost of AI remains one of the tech industry's dirtiest secrets.

What Needs to Change 🫸

It doesn't have to be this way. If AI is going to define the future, it's time we reckon with the hidden human cost that powers it, and build safeguards to protect the people behind the machines.

First and foremost, mental health support should be mandatory for anyone working in AI content moderation or data labeling. Not just token "wellness webinars," but real, trauma-informed therapy available privately and without retaliation. Companies earning billions from AI owe this to the workers absorbing its darkest inputs.

Second, pay must reflect the psychological risk. Reviewing graphic violence and sexual abuse is not low-skilled labor. It demands resilience and psychological endurance, and it exacts an emotional cost. It's time the industry recognized that through fair wages, benefits, and hazard pay for high-risk categories of content.

Transparency is equally critical. Tech giants should publicly disclose how many people perform AI content moderation, what they're paid, and what protections exist. Secrecy benefits corporations, but it keeps the human cost invisible, allowing exploitation to continue unchecked.

Some researchers and advocates argue for global labor protections tailored to digital work. The International Labour Organization has begun exploring standards for platform-based workers, but few guidelines specifically cover AI data labeling or moderation. A global framework could help ensure consistent protections, regardless of where workers live.

There's also hope in technological innovation. Some experts advocate using synthetic data (artificially generated examples of harmful content) rather than forcing human beings to review real-life atrocities. While synthetic data has limitations, it could reduce exposure to the worst material.

And finally, the public needs to care. As consumers of AI, we hold power. Asking hard questions about who trains these models, who moderates our digital spaces, and what it costs them, is the first step toward change.

"We can't let AI's future be built on invisible suffering," said Mary L. Gray. "Human dignity shouldn't be the price of technological progress."

The path forward is clear: treat AI not as pure magic, but as a human enterprise - because behind every machine lie human eyes, human labor, and human pain.

The Crossroads of AI: Trauma, Job Loss, and the Fight for Human Dignity 🛣

The sun is setting over Nairobi as the young man from the start of this story shuts down his computer. He leaves the lab, steps into the evening dust, and walks home with visions of violence and abuse echoing in his mind. The horrors he read today aren't just images on a screen - they've lodged themselves in his psyche, quietly corroding his sense of safety, intimacy, even reality itself.

He's part of the invisible workforce whose trauma made generative AI possible. But he's also part of another, growing human story: people whose livelihoods might soon vanish because of the very machines they helped to build.

As ChatGPT 5 rolls out to an audience of 800 million users, the conversation has shifted from the marvels of AI to the anxieties it triggers. White-collar workers (writers, coders, designers, customer service agents) now look at chatbots and wonder: Will I be next?

It's a cruel symmetry. The same technology that demands humans absorb the internet's darkest horrors may, in turn, displace those humans from the jobs they rely on. We've built machines that learn from us, but we've done so without fully reckoning with either the trauma we inflict on the people training them, or the disruption awaiting those who assumed their work was safe.

And so we stand at a crossroads. AI's rise promises efficiency, innovation, and wonder. But it also carries shadows of hidden trauma, job loss, and social upheaval. We cannot allow the illusion of machine magic to blind us to the human cost etched into every line of AI code.

Because we say AI is artificial. But its wounds, and the livelihoods it threatens, are entirely human.

Things we learned this week 🤓

- 😵 Maverick bosses tend to make their employees miserable.

- 😒 Anxious people are less likely to be bullies, say researchers.

- 🥰 Male domestic abuse survivors often face ridicule and skepticism.

- 🙉 Scientists can find no evidence of alpha males in animal groups.

Just a list of proper mental health services I always recommend 💡

Here is a list of excellent mental health services that are vetted and regulated that I share with the therapists I teach:

- 👨‍👨‍👦‍👦 Peer Support Groups - good relationships are one of the quickest ways to improve wellbeing. Rethink Mental Illness has a database of peer support groups across the UK.

- 📝 Samaritans Directory - the Samaritans, so often overlooked for the work they do, has a directory of organisations that specialise in different forms of distress. From abuse to sexual identity, this is a great place to start if you’re looking for specific forms of help.

- 💓 Hubofhope - A brilliant resource. Simply put in your postcode and it lists all the mental health services in your local area.

I love you all. 💋