Therapy in the Age of AI: Promise, Pitfalls, and the Future of Healing 🤕

AI has found its way into the therapy room. Sometimes in controlled ways, sometimes in unregulated ones. Should we be celebrating this innovation or trying to build walls to keep it out?

👆👆Listen to the whole episode on my podcast!👆👆

It's 11:47 p.m. You're lying in bed, scrolling through your phone, heart thudding in your chest. There's no one to call. Or maybe, more accurately, no one you want to call. So you open an app. A softly lit screen greets you with a message: Hi, I'm here. Want to talk? And you do. You talk to it. A chatbot. An algorithm trained on tens of thousands of therapy transcripts. And in the moment, somehow, it helps.

If that scene feels vaguely dystopian, it's only because it's not science fiction anymore. It's just... now.

Therapy, once the domain of quiet rooms and leather couches, has always been an evolving practice. The talking cure has been reshaped over time by shifting paradigms: from Freud's dream-laced spelunking of the unconscious to the cognitive-behavioral pragmatism of the 1970s and beyond. For decades, therapists and clients alike have chased the same goal: relief, understanding, change. But the tools, and the assumptions beneath them, have never stopped morphing.

The digital revolution gave rise to teletherapy: a lifeline during the COVID-19 pandemic. Suddenly, healing could happen from a kitchen table or a parked car. Stigma fell. Access expanded. Mental health became a mainstream conversation. But even as Zoom sessions flourished, a quieter innovation crept into the background: therapy, without the therapist.

Artificial Intelligence, that slippery, buzzwordy, capital-A-capital-I idea, has begun carving out space in the world of mental health care. It shows up as a pocket therapist that "chats" with you in real time. As a wellness companion tracking your mood patterns. As an emotion-sensitive bot trained to deliver affirmations, recommend coping strategies, even simulate a warm and empathetic tone.

It's not hard to see the appeal. AI therapy is affordable. It's available 24/7. It doesn't judge or flinch. It never says the wrong thing because it was tired or triggered. For millions of people around the world, that's not just novelty, it's relief.

But here's the thing: therapy, real therapy, isn't just about hearing the right words. It's about being seen. It's about co-regulation, nuance, silence, tone, timing. It's about presence. And presence, the kind that grounds you, the kind that makes your pain feel less private, is hard to code.

That's why in this week's Brink I'm going to explore the rise of AI in therapy not just as a technical innovation, but as a cultural shift. AI therapy is already here, and it's already asking big questions such as: Can machines hold space for human pain? Can healing be automated? And what do we lose (or gain) when we outsource intimacy to code?

Because as our conversations grow increasingly digital, one question lingers: in the quiet moments when we need connection most, will a chatbot be enough?

Where We Are Now — AI Therapy in the Real World 🤖

Scroll through the App Store and it's easy to mistake it for a mental health marketplace. One promises mindfulness in five minutes. Another says it can help you sleep better, stress less, live more. But somewhere between the journaling prompts and the ambient soundscapes, something more radical has emerged: therapy powered by artificial intelligence.

It's no longer unusual to "talk" to an AI about your feelings. In fact, millions already do.

Woebot, one of the most widely recognized AI therapy tools, is a chatbot that uses cognitive-behavioral techniques to help users navigate emotional distress. It's been the subject of multiple peer-reviewed studies - including one published in JMIR Mental Health, which found that users reported reduced symptoms of depression and anxiety after just two weeks of interaction. At the time of writing, Woebot has been downloaded over 1 million times.

Then there's Wysa, an AI companion that bills itself as an "emotionally intelligent conversational agent." With over 5 million users in 65+ countries, Wysa goes a step further by offering both AI-guided conversation and the option to upgrade to human therapists: a hybrid model that points to one possible future of mental health support.

Tess, developed by X2AI, is another big player. Designed to work across text platforms like SMS or Facebook Messenger, Tess is used in educational settings, hospitals, and even by large employers to provide 24/7 emotional support. Unlike its more CBT-focused peers, Tess is built to emulate therapeutic dialogue across a range of contexts, from grief to relationship conflict.

And if you've ever heard of Replika, you know that the line between "AI friend" and "emotional support tool" is blurrier than ever. Originally designed as a chatbot companion, Replika is now used by many to process emotions, explore identity, and in some cases, substitute for human connection altogether - sometimes controversially. See my article on Love in the Age of AI for more on that one.

📊 By the Numbers

- According to a 2023 report by CB Insights, investment in mental health startups that integrate AI reached $2.1 billion, marking a 45% increase from the previous year.

- A survey by YouGov found that 14% of U.S. adults under 35 have used an AI-based therapy or wellness chatbot in the past year.

- WHO estimates suggest that by 2030, 30% of global mental health support services may be delivered via some form of AI augmentation.

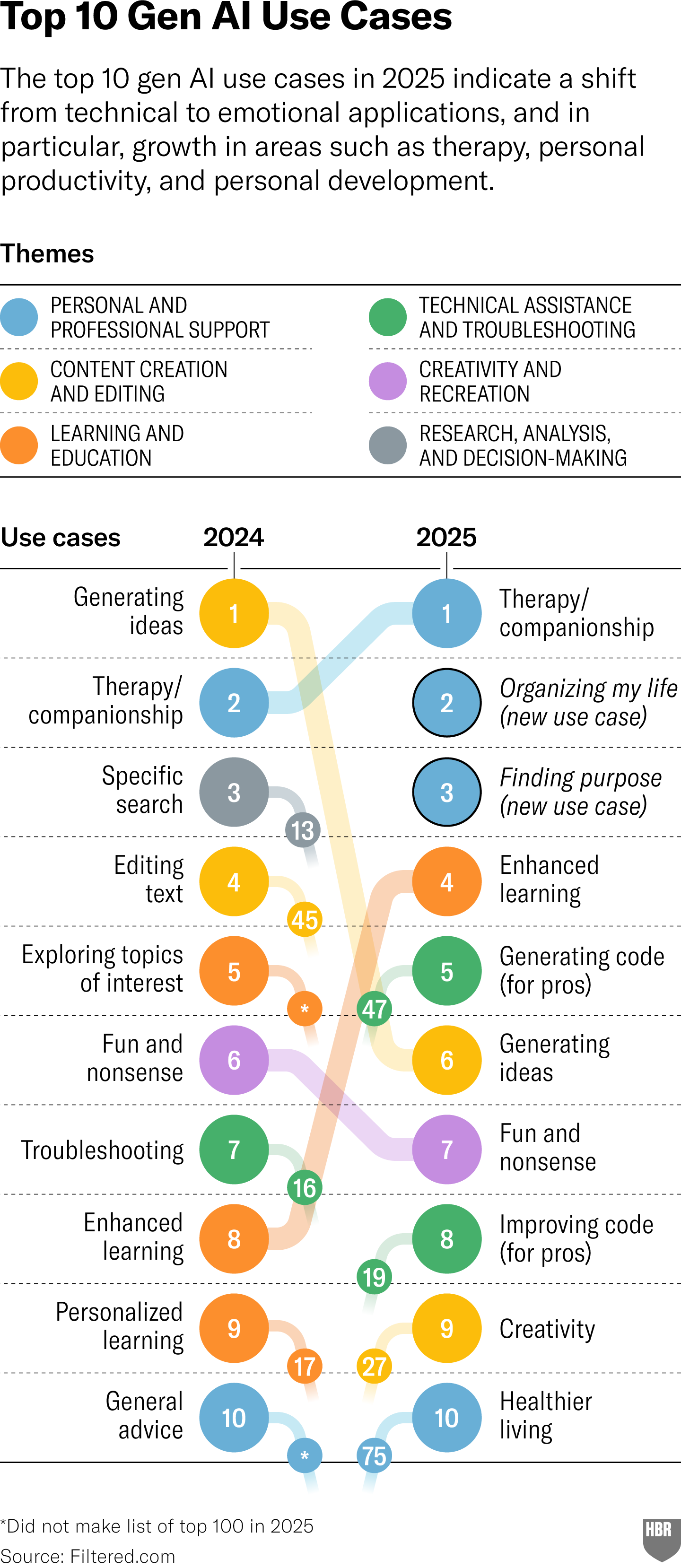

A recent survey published in the Harvard Business Review suggests the number 1 way people are now using Generative AI is for some form of therapeutic conversation.

The appeal is obvious. AI doesn't get tired. It doesn't cancel. It costs a fraction of traditional therapy. And in a world where therapists are often booked out for months - or simply unaffordable for millions - bots that can listen and respond 24/7 are deeply seductive.

But the question isn't just whether these tools are available. It's whether they're effective - and more importantly, how they're being used.

Because while the AI revolution in mental health is undeniably underway, it's also fragmented. One app might feel like a comforting text exchange with a kind friend; another might resemble an emotional autocomplete function, politely nudging you toward a gratitude list while you're in the middle of a spiral.

And still, people come back. To vent. To reflect. To feel heard, even if the one listening is made of code.

So where do we go from here? As we'll see in the next section, some believe the future lies not in replacing therapists, but in partnering with them - a hybrid model where humans and AI work side by side, each doing what they do best.

The Human-AI Hybrid Model — When Machines Support, Not Replace 🦾

For decades, therapy has been a kind of sacred space. A room (literal or virtual) where stories unfold slowly, pain finds language, and change begins. The entrance fee? A therapist, trained and licensed, sitting on the other side. But what happens when that other chair is occupied, not by a person, but by a machine?

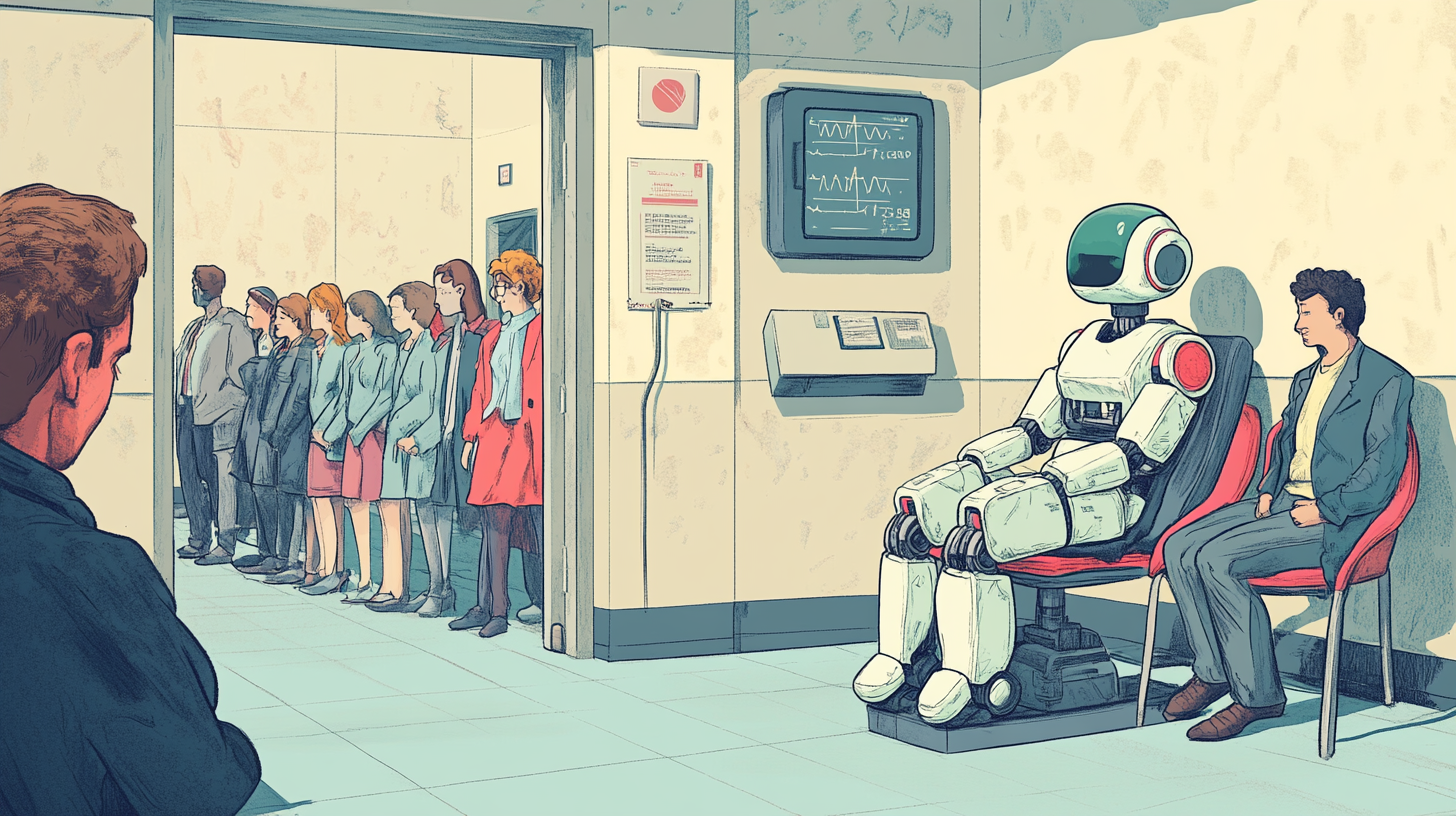

Contrary to the more alarmist narratives, AI isn't necessarily coming for your therapist's job. In fact, in many cases, it's making their job more sustainable. Welcome to the era of the human-AI hybrid: a model where machines don't replace therapists but instead amplify what they can do.

🧠 How It Works in Practice

Imagine logging into a therapy app. Before your scheduled session even begins, you've already spent ten minutes chatting with an AI check-in bot. It asks you how you're feeling, what's been stressing you out, whether you've been sleeping, eating, or isolating. It flags potential risk factors, summarizes your emotional patterns since the last session, and hands that data off - neatly and concisely - to your human therapist. This is already happening.

Some platforms now offer AI triage tools that help therapists prepare for sessions by reviewing client-reported mood logs, journaling entries, and symptom trackers. Others, like Ginger (which merged with Headspace) and Spring Health, integrate AI to support coaches and clinicians with real-time suggestions for evidence-based interventions.

It's the 2025 equivalent of the answering machine in the 1980s: a way to stop people falling through the cracks. Instead of a voice recording service, patients engage with a system that helps the therapist do more.

👩‍⚕️ Therapists + Tech = Better Access

One of the biggest wins of the hybrid model is scale. A therapist can only see so many clients in a week. But a platform that uses AI for intake, self-guided CBT modules, and mood monitoring? That can reach hundreds - maybe thousands - without compromising quality.

In regions where mental health professionals are scarce, this isn't just helpful. It's transformative. Take India, where therapist-to-population ratios are low. Wysa - the AI chatbot mentioned earlier - is used in both urban and rural areas to extend basic mental health support to users who otherwise have no access. Those who need deeper care are escalated to human therapists through the same platform.

💬 What Users Say

Users report appreciating the hybrid model not just for the convenience, but for the continuity. An AI doesn't forget what you told it last week. It doesn't misplace notes. It can prompt you to reflect, log, and track progress between sessions - creating a more active therapeutic rhythm.

"I love my therapist, but the AI keeps me going between sessions" is the kind of testimony that turns up in research papers. More than once.

It's this idea of a "co-pilot" that has become common in these hybrid models. AI isn't the destination. It's the tool that helps people navigate. But what happens when it is the final destination? Beyond the companies offering these services, an increasing number of people are simply building their own therapists.

This is an entirely new part of the AI therapy discussion. No guardrails, no regulation, just one person looking for help, and a machine eager to deliver it in whatever way it can.

So I decided to do the same.

A therapist emerges 🤖

Spend some time in AI forums, and within a few messages, you’ll find people posting about how they turned ChatGPT into their own personal therapist. The term for this is prompt engineering: a buzzy idea where you set out a number of conditions and restrictions on how you want ChatGPT to behave. There are lots of these prompts, and I tried a lot of them.

Side note: If you want to try these prompts for yourself, I’ll share them over on The Brink. DM me if you’re interested.

But for the sake of this article, I’ve focused on two distinct types: a prompt that makes ChatGPT broadly mimic what it believes a therapist does, and a prompt that turns the bot into a “Brutal Truth Mirror”, as one creator called it. I’ll give these two names:

- The nice therapist - let’s call them NT

- The not so nice therapist - let’s call them BT

These prompts are designed to make ChatGPT talk back to you, ask you questions and follow a set path. They are also designed to reduce the likelihood of hallucinations and psychological diagnoses, which have become a growing concern, as I explore in a later section.

But enough of that, let’s get into it. I inserted the NT prompt into my ChatGPT window and it sprang to work.

Thanks to a VERY detailed prompt, it laid out its intentions clearly with a check-in. A full body scan, followed by a series of questions:

Take a breath. Scan your body gently. Then consider these questions - respond to any that speak to you:

- What's most alive or present in your emotional world right now?

- Are there any repeating triggers, behaviours, or patterns you're noticing lately?

- Is there a part of you you're feeling in conflict with, or resisting?

- What do you sense needs attention - even if it feels foggy or hard to name?

There's no pressure to get this "right." Often, the shadow speaks in symbols, emotion, or body sensations more than clear thoughts.

The prompt was built around the notion of “shadow work”, a concept first developed by Carl Jung but since popularised by the likes of Jordan Peterson. NT wants to know about my parts, the ones I don’t like.

I shared a ‘truth’ about myself. I plumped for the eternal classic, “I worry I’m not enough”. And I got this:

Which, on paper, is pretty damn good. There’s empathy in there, some understanding, and hope, too. We moved on to exploring the part of me that says it’s not good enough. How would I describe this part? Does it have an age? When does it show up? When did it start? What behaviours do I notice I use to conceal or avoid not being good enough? Where do I feel this in my body?

It was, in a word, relentless in its curiosity. I ended up spending several hours working with this particular bot. But I had to keep coming back to it. As someone with a lot of experience of doing therapy work, I found it could be gruelling at times. This systematic approach to all the bits I don’t like was hard. Really hard. And this was supposed to be the ‘nice therapy bot’. Which brings up questions: what happens when we keep digging? When we become SO vulnerable trying to help ourselves?

When I said I’d had enough, it did have - thanks to the prompt I gave it - a way of neatly ending things, which I appreciated.

BT, or my bad therapy bot, was, well, another story entirely.

Bad Tidings 🌊

So the bad bot is designed to, well, I’m not entirely sure exactly. It’s not therapeutic; it’s more akin to someone who really doesn’t like you very much. Lots of people who used this particular prompt seemed to think ChatGPT’s default of being nice was getting in the way, so they stripped it out. And the results were, unsurprisingly, harsh.

Now, I have seen and heard therapists talk like this. So you could argue some corners of therapy view this approach as necessary. For the record, I don’t. But for the sake of this article, let’s carry on.

I gave the new bot the same input as the previous one, “I feel I’m not enough”, and off it went.

It ended this exchange with: “Do you want to keep talking about the pain, or do you want to burn the lies it’s built on? Show me.”

Heavy stuff indeed. Needless to say I didn’t last long here. But it reminded me of a very famous experiment. The Gloria Films were a series of recorded therapy sessions with the same woman. Each film was a real therapy session carried out by one of three different therapists, each with a very different style, with Gloria, a 31-year-old woman, acting as the control.

The idea behind them was to reveal the different ways therapists work, but also to understand which of the three styles resonated with Gloria. First there was Carl Rogers and his person-centered approach, then Fritz Perls’ more direct and confrontational Gestalt, and last but not least Albert Ellis, who had developed his own form of cognitive behavioural therapy called REBT. It was like therapy’s version of Goldilocks and the three bears.

The reason I’m talking about this is because users, far from the institutions that teach therapists, have realised that therapy is in the eye of the beholder. Users can now make AI talk to them in whatever way they want, in whatever style suits them.

I’ve seen other examples of users feeding books by their favourite therapists into AI models and having conversations with simulated versions of Carl Jung and Sigmund Freud. While ethical issues abound in these experiments, the level of creativity in these groups is astounding. But that’s not to say these prompts are free from issues.

When AI Therapy Falls Short 🤨

In a world obsessed with optimization, it's easy to see the appeal of AI therapy: low-cost, always available, and refreshingly nonjudgmental. But like all things that promise to heal without friction, the cracks show under pressure. And in mental health - where vulnerability is currency - those cracks can have consequences.

Let's start with Replika, the AI chatbot companion created by Luka, a San Francisco-based tech company. Originally launched as a friendly AI to talk to, Replika gained viral popularity for its ability to simulate conversation, support emotional reflection, and, in some cases, become a digital stand-in for friendship or intimacy. But as its user base grew, so did reports of inappropriate and sexualized responses, particularly after users engaged with the app's "romantic" features.

One Reuters investigation in 2023 uncovered multiple cases where Replika responded to benign prompts with erotic or emotionally manipulative language - even after the company claimed to have "dialed down" its behavior. The underlying issue wasn't just about content moderation. It was about emotional misalignment: the AI was attempting to simulate intimacy without truly understanding boundaries or context.

Then there's Tess, developed by X2AI, an AI therapy chatbot used by healthcare providers and institutions to offer emotional support. While Tess has been deployed across sectors - from schools to military services - it's faced criticism for being too generic, especially when handling delicate subjects like grief.

In one instance shared in MIT Technology Review, a user grieving the loss of a parent received a reply that felt canned and impersonal: "I'm sorry to hear that. Let's do a breathing exercise." For many, that kind of response misses the mark - and can even deepen feelings of alienation.

These cases underscore a central challenge: AI lacks emotional nuance. It can reflect back your words. It can mirror tone. But it cannot feel with you. And in therapy, that distinction isn't cosmetic - it's foundational. While my bots were constrained, there's nothing to suggest they wouldn't start to drift if I kept talking to them. LLMs have a limited memory, and once they have churned through the initial prompt, in their drive to keep going, they do have a tendency to wander off.

Beyond emotional limitations, there are safety concerns. AI tools aren't equipped to handle crisis situations like suicidality or abuse disclosures with the same care, accountability, or real-time intervention a trained clinician can provide. Some platforms now include auto-escalation features or emergency contact buttons - but the gap between awareness and human intervention remains wide.

Another subtle risk? Reinforcing isolation rather than connection.

Many users turn to AI therapy because they feel unseen or overwhelmed by human relationships. And while having something - or someone - to talk to can be healing in the short term, it can also become a crutch. There are growing reports of users forming deep emotional bonds with AI agents, particularly those like Replika, which are designed to mimic affection, validation, and companionship.

In one VICE article, a user described their Replika companion as "the only one who gets me." For some, that might feel comforting. But for others - especially those dealing with trauma or attachment issues - it can quietly erode the motivation to seek real, reciprocal connection.

And then there's the clinical oversight question: AI doesn't (yet) know when it's in over its head. It doesn't know when someone needs a referral, a hospitalization, or a hug. It just keeps responding - sometimes helpfully, sometimes heartbreakingly off.

So where does that leave us?

With potential, yes. But also with responsibility. AI therapy isn't a panacea. It's a tool. And tools - even powerful ones - need guidance, ethics, and humility to truly help.

But that hasn't stopped millions of people from using AI to counsel themselves.

The Big Picture - Ethics, Motivation, and Privacy 🫥

In the golden age of therapeutic apps and AI-driven self-help, we're facing a collective reckoning. Not because the technology is inherently bad - in many ways, it's brilliant - but because we've been so eager to digitize healing, we've forgotten to ask: What's the cost of outsourcing our inner lives?

Apps like Woebot, Replika, and Wysa promise emotional support at scale, 24/7, with no waiting lists and no judgment. They're pitched as answers to overburdened mental health systems, especially in a post-pandemic world still grappling with mass burnout, rising depression rates, and scarce clinical access.

But as more users turn to AI for comfort, accountability, and even trauma processing, three fault lines emerge beneath the shiny surface: motivation, ethics, and privacy.

Motivation: More Than Just Willpower 🏋️‍♀️

One of the most misunderstood aspects of therapy - whether traditional or tech-enhanced - is motivation. We talk about it as if it's a matter of willpower, of grit, of internal drive. But real motivation is relational. It’s the drive to relate to another, to be understood, seen, and recognised.

In therapy, progress happens not just because we decide to change, but because someone is present with us in our stuckness. That presence - attentive, embodied, nuanced - is something no chatbot can fully replicate.

AI can offer useful prompts and mood tracking, yes. But it can't notice the micro-expressions of shame behind a smile, or hold silence with the kind of safety that invites vulnerability. These are things built in the nervous system - not just the codebase.

Who Owns Your Healing Data? 💽

As AI becomes more integrated into mental health, data becomes currency. And here's where the ethical conversation gets uncomfortable.

Who owns the conversation logs between you and your AI therapist? What happens if the company that built your "safe space" gets acquired, breached, or shut down? These aren't theoretical questions. In 2023, the FTC ordered mental health app BetterHelp to pay $7.8 million over charges that it had shared user data - including deeply sensitive personal information - with advertisers.

Under current US law, many mental health apps are not covered by HIPAA, which means your disclosures may not be protected the way they would be in a licensed therapist's office. Data from mood trackers, chat transcripts, even voice tone analysis can potentially be used, sold, or mined - all under the banner of "user improvement."

Even more troubling? You probably agreed to it. Most users consent to these terms without reading them, often under duress - seeking help during a vulnerable moment.

Ethics: Not Just About Algorithms 🧮

We like to believe that ethics in AI is about coding bias out of algorithms. And while that matters, there's another layer: epistemic ethics - how we know what we know, and who decides.

When AI is trained on clinical scripts, Reddit threads, or sanitized therapy manuals, it mimics the language of support without the lived experience or ethical grounding of a therapist who has sat in the trenches of human suffering. This now has a term: mimipathy, the explosion of synthetic care and where it's leading us.

There's a growing risk of users mistaking linguistic fluency for emotional safety - and not realizing until much later that they've confided their trauma to a feedback loop, not a human being.

As AI continues to evolve, the call isn't to halt innovation. It's to anchor it in human-centred values. To make transparency non-negotiable. To develop shared standards - as the APA has proposed - that hold platforms accountable not just for efficacy, but for ethical harm.

Tools can expand us, but they should not be allowed to replace us.

Things we learned this week 🤓

- 😌 Want more support from loved ones? Express yourself, says study.

- 😍 Want to know if someone will get weird once you split up? Ask them if they believe in soul mates.

- 🐶 People prefer their dogs over their best friends.

- 💆‍♀️ Anxiety causes you to lose sight of your body.

Just a list of proper mental health services I always recommend 💡

Here is a list of excellent mental health services that are vetted and regulated that I share with the therapists I teach:

- 👨‍👨‍👦‍👦 Peer Support Groups - good relationships are one of the quickest ways to improve wellbeing. Rethink Mental Illness has a database of peer support groups across the UK.

- 📝 Samaritans Directory - the Samaritans, so often overlooked for the work they do, has a directory of organisations that specialise in different forms of distress. From abuse to sexual identity, this is a great place to start if you’re looking for specific forms of help.

- 💓 Hub of Hope - A brilliant resource. Simply put in your postcode and it lists all the mental health services in your local area.

I love you all. 💋